Much of what Google does with AI scares people, and they will want something done.

With an AI here and an AI there, here an AI, there an AI, everywhere an AI, Old McGoogle has a new farm. It's one Google has been working on for a long time and still isn't finished with: making computers in secret server rooms teach themselves how to act like people. Notice I said act and not think. AI and Machine Learning aren't really intelligent, and they don't learn the way we imagine when we hear those words. But those computers can take the information given to them and find other ways to use it, and that makes them seem sentient. Scary stuff.

AI isn't ready to kill all humans, but can it ever get there? People will have questions.

Now, I'm not saying this is where the machines start to outthink humanity and take over. That's fiction best left for movie directors and writers to have some fun with. But smarter computers can make it easier for someone to drain your bank account or trick your smart front door lock into opening. Those things can already be done, and smarter computers will make them easier, which means more people will be able to do them. The reality here is a bit concerning. Go a step further, and the average person who isn't really hyped on AI and Machine Learning will be afraid that those computers can do even more.


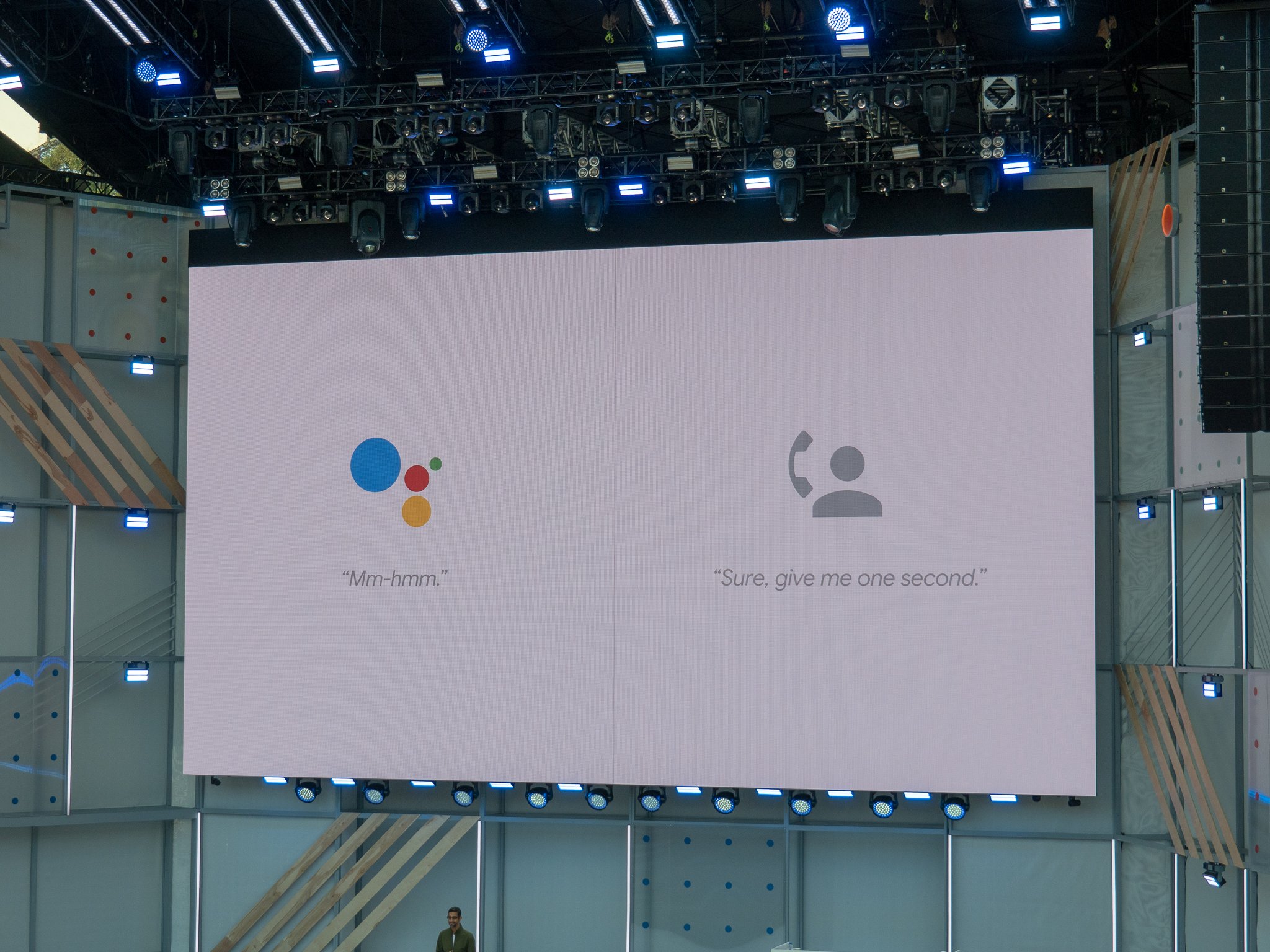

Maybe they are right. It doesn't really matter, because perception is reality when it comes to things society fears. You might have seen the concern on the internet about Duplex. Duplex is Google's effort to make a computer talk and act like a real, live human over a phone call. A demo of it making a hair appointment absolutely stunned the audience and many of us watching on YouTube. Rightfully so, because a computer that knows to say "uh, wait" or "umm yeah" is something we have never seen before. The Google Home that initiates that call isn't doing anything "wrong," other than not saying "Hey, I am a computer. Is that OK?" to the person on the line, and Google has addressed those specific concerns. But it's still ... strange.

Seeing a computer trick a person into thinking they are talking to another human is not a good look and will only lead to more fears of what all this AI stuff can do. Maybe not to you, but to someone. It was creepy, for lack of a better word. Creepy is not how you want to unveil something amazing to the world. Nobody wants to get a creepy phone call or feel uncomfortable about whether they're talking to a person or a computer.

Seeing Google Duplex was awesome. And creepy.

That's just the tip of the iceberg. We also saw AI take a YouTube video clip and isolate one voice from another, even when the two speakers were arguing and talking over each other. That was cool and not scary at all, but how it was accomplished might be. This happened because researchers working on WaveNet noticed that a computer had "learned" to better single out one voice when it was given many voices at the same time. That wasn't expected or programmed; the computer did it by itself using the information it had stored. We have to wonder what else can happen by accident, and whether anything already has that we aren't hearing about.

Google has to be very upfront with all of this information. We need to know what is being done, how it's being done, and what the outcome is. Not just the exciting bits and pieces that aren't concerning. And if nothing is happening that shouldn't be, tell us that, too. There are already valid privacy concerns over this new technology, and this is the first we've seen of it. We'll have more concerns the more we see.

People tend to worry, even when they don't have to. If Google doesn't do this the right way, someone will step in and force them to do it differently. If we want the future to be exciting and computers to do more, we want computer scientists and skilled developers doing it, not elderly white dudes in Congress.
